intro: the setup

welcome! this is the intro to my setup. it gives an overview of the system and how the pieces interact. i hope to write future posts with more detail on each item.

TLDR; docker + gitlab = plex, sonarr, radarr, and a whole lot of other things to manage all my media.


System Architecture:

Applications:

- Sonarr

- Radarr

- Lidarr

- Resilio

- Transmission

- Varken

- Plex

- Tautulli

- Netdata

- Ombi

- Organizr

- ZeroTier

- Gitlab

- Docker

- Elkar

- NextCloud

- Mattermost

Automation:

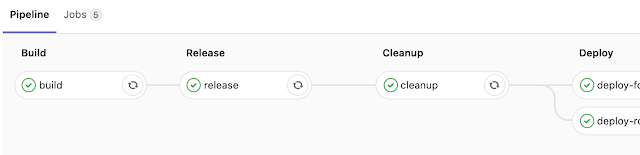

it took a long time to get to this point, but to sum it up, i use gitlab ci/cd to manage the containers on the various servers. this makes redeployments, upgrades, and changes very easy. i've found the linuxserver containers to be the best, since they are based on alpine and let you set the user id that everything runs as. i recommend building your own alpine base image on top of theirs, and then building your application containers off of that.
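as a rough sketch of what a ci deploy job might run on each host, here's a small python helper that builds a `docker run` command for a linuxserver-style image. it assumes the linuxserver convention of `PUID`/`PGID`/`TZ` environment variables; the function name, the paths, and the `10.147.17.10` address (a stand-in for a host's zerotier IP) are hypothetical placeholders, and `bind_ip` reflects the misc note about binding containers to the zerotier address.

```python
import shlex


def linuxserver_run_args(name, image, puid, pgid, tz="Etc/UTC",
                         ports=None, volumes=None, bind_ip=None):
    """Build a `docker run` argument list for a linuxserver-style image.

    PUID/PGID tell linuxserver images which user id / group id the app
    runs as. bind_ip (hypothetical parameter) publishes ports on a single
    address, e.g. a zerotier IP, instead of on every interface.
    """
    args = ["docker", "run", "-d", "--name", name,
            "-e", f"PUID={puid}", "-e", f"PGID={pgid}", "-e", f"TZ={tz}"]
    for host_port, container_port in (ports or {}).items():
        # "-p IP:host:container" publishes the port on that address only
        prefix = f"{bind_ip}:" if bind_ip else ""
        args += ["-p", f"{prefix}{host_port}:{container_port}"]
    for host_path, container_path in (volumes or {}).items():
        args += ["-v", f"{host_path}:{container_path}"]
    args.append(image)
    return args


if __name__ == "__main__":
    # placeholder values: 10.147.17.10 stands in for the host's zerotier IP
    args = linuxserver_run_args(
        "radarr", "lscr.io/linuxserver/radarr", puid=1000, pgid=1000,
        ports={7878: 7878}, volumes={"/opt/radarr/config": "/config"},
        bind_ip="10.147.17.10")
    print(shlex.join(args))
```

returning a list rather than a string means it can go straight to `subprocess.run` without quoting issues; `shlex.join` is only there to print a copy-pasteable command.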

whether media is added manually or through requests, everything starts with sonarr and radarr, which live on RH. RH's job is to manage new media and to host half of the media array, in this case all the movies. it also has an array called queue; it's only a few TB, but it holds incoming data from the downloaders. RH runs resilio and has a handful of folders that are synced with the downloaders.

ZO and FO are the downloaders; they run transmission and resilio. the folder structure looks something like this:

- FOwatchQueue

- FOmovieQueue

- FOtvQueue

these folders are synced between FO and RH. in most cases FO handles movies and large season packs, since it allows longer seed times. here's an example of what a movie flow looks like.

Radarr on RH drops a torrent file into a blackhole folder, FOwatchQueue. FO picks up the file once it's synced over via resilio (using zerotier). once the download finishes, a post-processing script hardlinks it into the queue folder, which eventually gets synced from FO to RH. a custom script i wrote looks for files in that folder and copies them to radarr's processing dirs. it started out as a bash script; now it's an overly complicated library that also handles stats (i wrote that before i found varken). once radarr picks the file up, it drops it into the final destination, where plex then picks it up.
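the two file-moving steps in that flow can be sketched in python. this is not the author's bash script or library; it's a minimal illustration under a few assumptions: the function and directory names are hypothetical, both ends of the hardlink sit on the same filesystem, and resilio marks files that are still syncing with a `.!sync` suffix.

```python
from __future__ import annotations

import os
import shutil
from pathlib import Path

# assumption: resilio marks files that are still syncing with a ".!sync" suffix
IN_PROGRESS_SUFFIX = ".!sync"


def hardlink_into_queue(download: Path, queue_dir: Path) -> Path:
    """Downloader side: hardlink a finished download into the synced queue dir.

    A hardlink uses no extra space and leaves the original path in place so
    transmission can keep seeding. Both paths must be on the same filesystem.
    """
    queue_dir.mkdir(parents=True, exist_ok=True)
    target = queue_dir / download.name
    if not target.exists():
        os.link(download, target)
    return target


def copy_queue_to_processing(queue_dir: Path, processing_dir: Path) -> list[Path]:
    """RH side: copy newly arrived queue files into radarr's processing dir.

    Files still being synced and files already copied are skipped, so the
    function is safe to run repeatedly (e.g. from cron).
    """
    processing_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(queue_dir.iterdir()):
        if not src.is_file() or src.name.endswith(IN_PROGRESS_SUFFIX):
            continue
        dst = processing_dir / src.name
        if dst.exists():
            continue
        shutil.copy2(src, dst)  # copy2 also preserves timestamps
        copied.append(dst)
    return copied
```

copying (rather than moving) on the RH side leaves the queue copy in place, so the synced folder on FO isn't disturbed; cleaning up the queue afterwards is left out of this sketch.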

most content follows the same flow, and over the years i've rewritten code here and there. for example, the downloader post-processing script is a bash script that calls a perl script, which makes more informed decisions about content type. i keep the 4k library local because, yeah, bandwidth.
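the post doesn't say what rules the perl script uses, so here's only a hypothetical sketch of one way a content-type decision could be made from a scene-style release name. the patterns and the `classify` name are assumptions for illustration, not the author's logic.

```python
import re

# hypothetical rules, based on common scene-style release names
EPISODE_RE = re.compile(r"\bS\d{1,2}E\d{1,3}\b", re.IGNORECASE)  # e.g. S02E05
SEASON_RE = re.compile(r"\bS\d{1,2}\b", re.IGNORECASE)  # e.g. S03 (season pack)
UHD_RE = re.compile(r"\b(?:2160p|4k|uhd)\b", re.IGNORECASE)


def classify(release_name: str) -> str:
    """Return '4k', 'tv', or 'movie' for a release name.

    4k is checked first because that library is handled separately.
    """
    if UHD_RE.search(release_name):
        return "4k"
    if EPISODE_RE.search(release_name) or SEASON_RE.search(release_name):
        return "tv"
    return "movie"


if __name__ == "__main__":
    for name in ("Show.Name.S02E05.1080p.WEB", "Movie.Name.2019.2160p.UHD"):
        print(name, classify(name))
```

a real script would likely need more signals than the name alone (file size, the category the torrent came from, and so on); name matching is just the easiest place to start.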

Organizing it all:

after searching and trying different options, i settled on organizr. this little container is a portal for everything media-related.

Stats:

i started with plexwatch, which became Tautulli. it keeps track of watch history, IPs, users, and a few other things. the detail is good, and it's usually the go-to.

next up is varken, a container (or script) that reads data from sonarr, radarr, Tautulli, unifi, and a few others. it aggregates those stats into influxdb, and grafana then graphs them. it's very cool.

the grafana dashboard is clean and presents the data in a nicer format.
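varken handles this collection for you, but to show the idea, here's a minimal sketch that asks Tautulli's v2 API for current activity (`/api/v2?apikey=...&cmd=get_activity`) and turns it into one InfluxDB line-protocol point that grafana could graph. the payload field names and the kbps unit are assumptions to check against the Tautulli API docs; the measurement name `plex_activity` and the field names on the influx side are hypothetical and not varken's actual schema. the sample numbers mirror the peak reported in the conclusion.

```python
import json
import urllib.parse
import urllib.request


def fetch_activity(base_url: str, api_key: str) -> dict:
    """Call Tautulli's v2 API with cmd=get_activity and return the parsed JSON."""
    query = urllib.parse.urlencode({"apikey": api_key, "cmd": "get_activity"})
    with urllib.request.urlopen(f"{base_url}/api/v2?{query}", timeout=10) as resp:
        return json.load(resp)


def activity_to_line_protocol(activity: dict, host: str, ts_ns: int) -> str:
    """Turn a get_activity payload into one InfluxDB line-protocol point.

    Field names below are assumptions about the payload; bandwidth values are
    assumed to be in kbps. Integer fields get the "i" suffix line protocol uses.
    """
    data = activity["response"]["data"]
    fields = {
        "streams": int(data["stream_count"]),
        "direct_play": int(data["stream_count_direct_play"]),
        "direct_stream": int(data["stream_count_direct_stream"]),
        "transcode": int(data["stream_count_transcode"]),
        "lan_kbps": int(data["lan_bandwidth"]),
        "wan_kbps": int(data["wan_bandwidth"]),
    }
    field_str = ",".join(f"{key}={value}i" for key, value in fields.items())
    return f"plex_activity,host={host} {field_str} {ts_ns}"


if __name__ == "__main__":
    # sample payload; counts may arrive as strings, so int() handles both
    sample = {"response": {"result": "success", "data": {
        "stream_count": "14", "stream_count_direct_play": 3,
        "stream_count_direct_stream": 1, "stream_count_transcode": 10,
        "lan_bandwidth": 5500, "wan_bandwidth": 54600}}}
    print(activity_to_line_protocol(sample, "FH", 1_700_000_000_000_000_000))
```

keeping the fetch and the conversion separate means the conversion can be checked offline against a saved payload before pointing it at a live server.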

misc notes:

- zerotier traffic is already encrypted, so turn off encryption in resilio and it will be much faster. also set static hosts, and don't use relays or trackers

- bind all container ports to the zerotier address

- mattermost, slack, discord, or something similar is useful for keeping track of flows.

- put plex's internal library on SSD; the skip images can be moved to spinning disks.

- set stream limits per user (curbs abuse)

- run raid6 if you can; it survives up to two simultaneous disk failures

- create the same user (same uid and gid) on all machines, and make sure the containers all run as that user
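to put numbers on the raid6 note, here's a quick sketch of the capacity math, assuming a classic RAID 6 layout where two disks' worth of space goes to parity (the function name is just for illustration):

```python
from __future__ import annotations


def raid6_usable(disk_sizes_tb: list[float]) -> float:
    """Usable capacity of a RAID 6 array, in the same unit as the inputs.

    RAID 6 keeps two independent parity blocks per stripe, so two disks'
    worth of space goes to parity and any two disks can fail without data
    loss. Each member only contributes as much as the smallest disk.
    """
    if len(disk_sizes_tb) < 4:
        raise ValueError("RAID 6 needs at least 4 disks")
    return (len(disk_sizes_tb) - 2) * min(disk_sizes_tb)


if __name__ == "__main__":
    print(raid6_usable([10] * 8))  # 8 x 10 TB -> 60 usable
```

compared to raid5 you give up one more disk's worth of space, in exchange for still being protected if a second disk dies during a long rebuild.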

intro conclusion:

overall, with FH as the only plex server and 24 cores (even on an older cpu), the system can handle a decent number of streams. the max i've seen so far is:

Streams: 14 streams (3 direct plays, 1 direct stream, 10 transcodes)

Bandwidth: 60.2 Mbps (LAN: 5.5 Mbps, WAN: 54.6 Mbps)

anyway, hope you enjoyed it! i'll get into more detail in other posts.
